
Visual search: The first step toward a multimodal future

Ecommerce search is undergoing its biggest shift since the keyword era. Shoppers no longer arrive with perfect queries; they arrive with intent, expressed through text, images, voice, or a mix of all three.

As AI reshapes how shoppers express what they want, retailers must move from “literal keyword matching” to “understanding what the shopper is trying to find.”

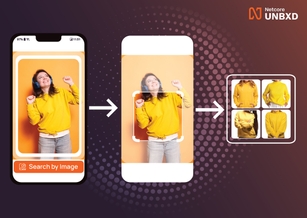

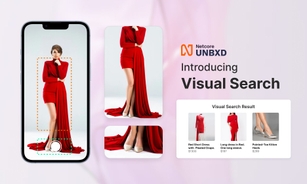

Visual search closes this gap by letting shoppers search the way they think, not the way they type.

Key Takeaways:

- Visual search is becoming a core part of ecommerce discovery as shoppers increasingly express intent through images, text, and context rather than perfect keywords.
- Moving toward multimodal search requires understanding shopper intent, and visual search is the most practical first step because it is intuitive, quick to adopt, and generates immediate improvements in conversion.
- Visual search strengthens the entire discovery stack by enriching catalog attributes, improving metadata quality, and making product data more machine-readable.
- It enhances the user journey by reducing zero-result pages, speeding up refinement, improving mobile experiences, enabling “complete the look” recommendations, and converting inspiration from social platforms into commerce actions.
- Successful deployment depends on clean product images, consistent catalog attributes, and behavioral data that improves ranking over time.
- High accuracy comes from a combination of strong image quality, enriched attributes, category hygiene, hybrid relevance models, and feedback loops.
- Netcore Unbxd’s visual search stands out due to fast response times, multi-object detection, flexible API inputs, deep analytics, and seamless integration with semantic, vector, and keyword engines.

Why ecommerce is moving beyond keywords

Keyword search still works. But today’s shoppers expect discovery that feels:

- Instant and frictionless: fewer clicks, fewer refinements
- Visually intuitive: especially for fashion, beauty, home décor, and lifestyle
- Context-aware: recognizing style, attributes, categories, and use cases
- Personal: tuned to each shopper's preferences and behavior

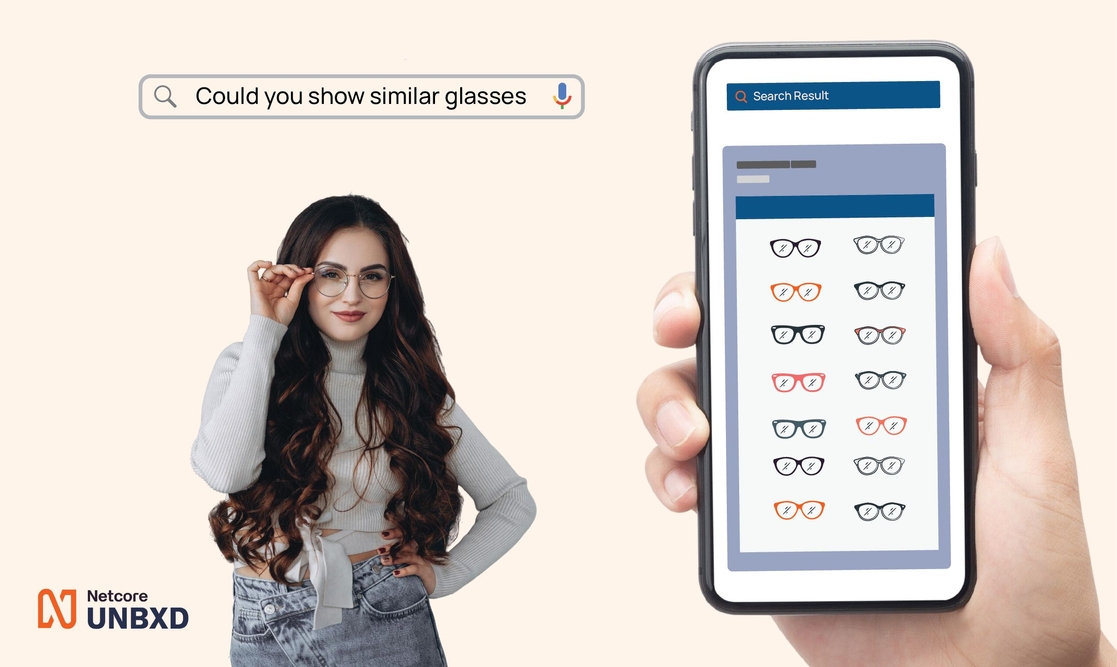

Multimodal search unlocks these experiences by letting shoppers express intent the way humans naturally do: through language + visuals + context.

The real differentiator, then, is not the camera icon. It is the quality of the attributes, vectors, and hybrid ranking you build on top of that visual signal. That is where multimodal and agentic search begin.

Visual search adoption and impact

Google reports that Lens processes billions of visual searches every month, with a significant share directly tied to shopping intent. Major retailers are seeing visual search usage grow sharply year over year, and industry studies consistently report 10–15 percent lifts in sales or conversion when image-based discovery is rolled out at scale.

As visual search tools mature, the gap between adopters and laggards will only widen.

Visual search beyond a single category

Although fashion and lifestyle brands were early adopters, visual search is now spreading across multiple industries. Fashion, beauty, furniture, and home decor use it to shorten the journey from inspiration to purchase. Marketplaces and logistics players rely on it to identify products from damaged labels, poor-quality photos, or incomplete part numbers.

Even industrial and field-service teams use visual search to recognize components in complex environments and trigger the right workflows. This cross-industry traction signals that visual search is becoming a foundational interface, not a niche feature.

Why visual search is the first practical step toward multimodal search

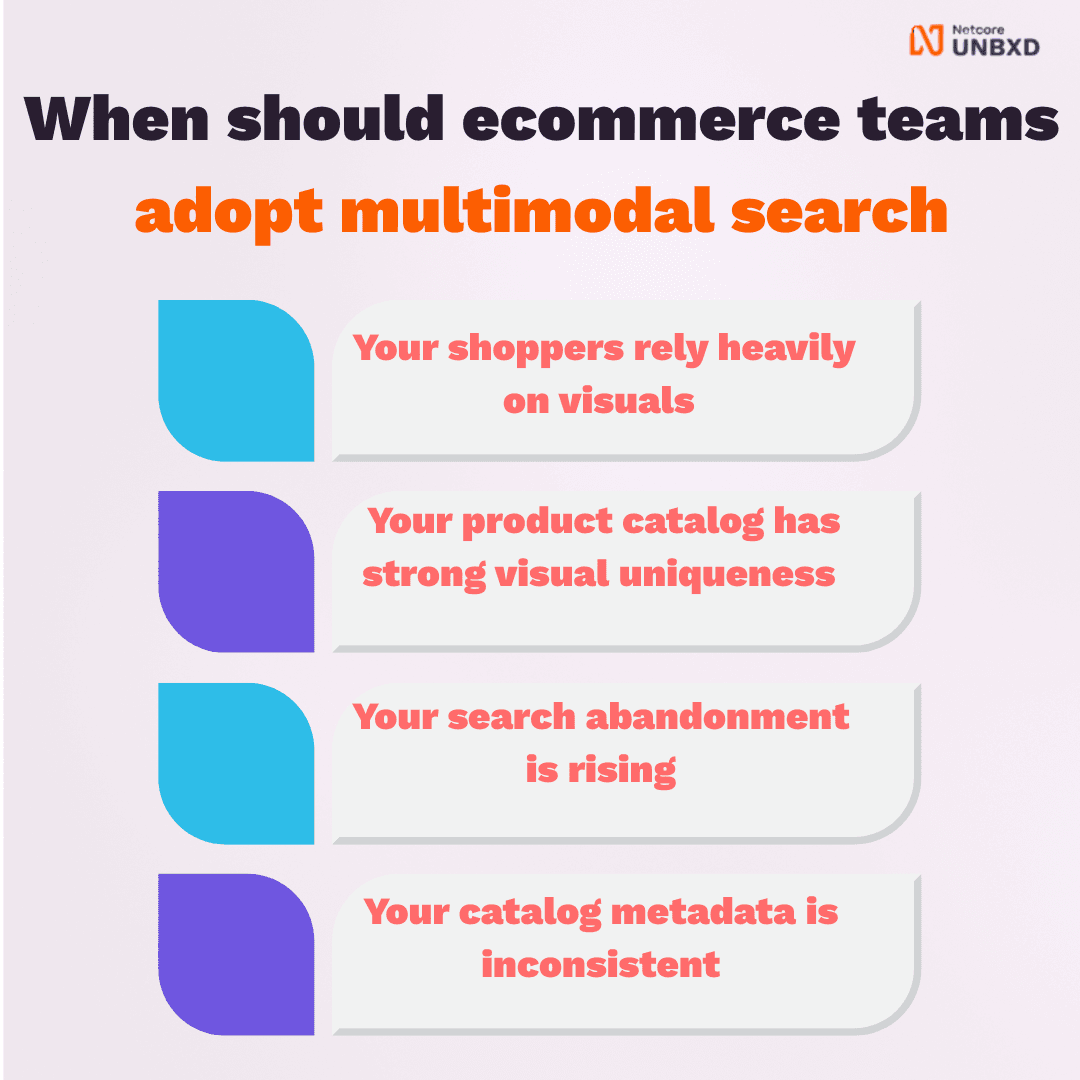

Multimodal search combines image + text + behavioral + contextual signals, but most retailers begin the transformation with visual search, because:

- It’s easy for shoppers to use
- It produces immediate conversion lift
- Image understanding boosts accuracy across your entire search pipeline
- It prepares product data for future agentic workflows
- It makes the catalog “AI-readable” by enriching attributes

Visual search is the gateway to better product discovery and the foundation for more advanced AI-led capabilities.

UX improvements that visual search unlocks

Visual search improves the shopper journey across the funnel:

- Removes the need for perfect keywords: a single upload gives instant context.
- Reduces zero-result searches through visual intent recognition.
- Shortens refinement cycles: shoppers skip filters and jump straight to relevant items.
- Delivers “complete the look” recommendations using visual style matching.
- Improves mobile search, where typing long queries is inconvenient.
- Boosts engagement by turning social inspiration into purchasable moments.

Setup complexity: what teams really need to know

Visual search may seem complex, but modern AI platforms simplify the heavy lifting.

What’s easy:

- Plug-and-play APIs for uploading product images
- Pre-trained vision models
- Auto-extraction of visual attributes
- Real-time ingestion and indexing

Where retailers must plan:

- Maintaining consistent product image quality
- Cleaning catalog metadata to align text + visual signals
- Ensuring categories are correctly mapped
- Identifying attributes that visual AI can enhance

This naturally brings us to a critical dependency: Attribute Enrichment.

Data requirements for accurate visual + multimodal search

Visual search accuracy depends on the quality of:

1. Product images

- Clear, front-facing, uncluttered
- Multiple angles
- Standardized lighting

2. Catalog attributes

Visual models perform best when paired with strong metadata like:

- Color
- Material
- Pattern
- Fit
- Silhouette
- Occasion
- Style

Retailers often have gaps here, which is why AI-led attribute enrichment becomes essential.

3. Behavioral signals

- Clicks
- Product views
- Add-to-cart events
- Category refinements

This behavioral data helps the model fine-tune rankings over time.
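To make "fine-tune rankings over time" more concrete, here is a minimal Python sketch of one common way to turn raw engagement events into a ranking signal. The event weights, the `ProductStats` structure, and the `prior` smoothing value are illustrative assumptions, not Netcore Unbxd's scoring; category refinements could be added as another weighted event type.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical weights: events that signal stronger purchase intent count for more.
EVENT_WEIGHTS: Dict[str, float] = {"view": 1.0, "click": 2.0, "add_to_cart": 5.0}


@dataclass
class ProductStats:
    impressions: int  # times the product was shown in visual search results
    events: Dict[str, int] = field(default_factory=dict)  # event name -> count


def behavioral_score(stats: ProductStats, prior: float = 10.0) -> float:
    """Weighted engagement per impression, damped for low-traffic products."""
    weighted = sum(EVENT_WEIGHTS.get(name, 0.0) * count
                   for name, count in stats.events.items())
    # Adding `prior` pseudo-impressions with no engagement shrinks scores that
    # rest on very little traffic, so one lucky add-to-cart cannot dominate.
    return weighted / (stats.impressions + prior)


popular = ProductStats(1000, {"view": 400, "click": 200, "add_to_cart": 80})
newcomer = ProductStats(1, {"add_to_cart": 1})
print(behavioral_score(popular) > behavioral_score(newcomer))  # True
```

Without the `prior` term, the newcomer would score 5.0 against the popular product's 1.2; the pseudo-impressions keep a single add-to-cart on a barely seen item from outranking a proven seller.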

AI accuracy drivers: what determines great results

Visual search accuracy depends on multiple system-level components:

- Image quality: the clearer the product, the better the model identifies it.
- Attribute density: enriched catalogs outperform raw catalogs.
- Label consistency: mixed labels such as “navy blue” vs “blue/navy” slow down matching.
- Category hygiene: miscategorized products degrade accuracy significantly.
- Hybrid relevance models: vision AI, text retrieval, vector embeddings, and user intent working together.
- Feedback loops: more interactions lead to better ranking over time.
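Label consistency is often the cheapest accuracy driver to fix. The Python sketch below assumes a hand-maintained alias table; the `COLOR_ALIASES` mapping and the `normalize_color` helper are hypothetical. It collapses spelling variants such as “navy blue” and “blue/navy” into one canonical value before indexing.

```python
import re
from typing import Optional

# Hypothetical alias table: every raw spelling maps to one canonical color label.
COLOR_ALIASES = {
    "navy": "navy blue",
    "navy blue": "navy blue",
    "blue/navy": "navy blue",
    "off white": "off-white",
    "off-white": "off-white",
}


def normalize_color(raw: str) -> Optional[str]:
    """Return the canonical label for a raw catalog color, or None if unmapped."""
    key = raw.strip().lower()
    key = re.sub(r"\s*/\s*", "/", key)  # "blue / navy" -> "blue/navy"
    key = re.sub(r"\s+", " ", key)      # collapse repeated whitespace
    return COLOR_ALIASES.get(key)


for raw in ["Navy Blue", " blue / navy ", "NAVY", "teal"]:
    print(repr(raw), "->", normalize_color(raw))
```

Returning `None` for unmapped values, rather than guessing, makes it easy to route unknown labels to a merchandiser or an enrichment model for review.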

Netcore Unbxd’s hybrid model combines vision understanding, entity recognition, vector search, and behavioral ranking to ensure accuracy scales with catalog size.
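A hybrid relevance model can be as simple as a weighted sum of per-signal scores. The following is a minimal Python sketch, not Netcore Unbxd's ranking model: it assumes image embeddings are already computed and that text and behavioral scores are pre-normalized to [0, 1]. The weights (0.6, 0.3, 0.1) are arbitrary placeholders that would normally be tuned on click and conversion data.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_rank(
    query_vec: Sequence[float],
    catalog: Dict[str, Sequence[float]],  # product id -> image embedding
    text_scores: Dict[str, float],        # product id -> text relevance in [0, 1]
    behavior_scores: Dict[str, float],    # product id -> behavioral score in [0, 1]
    weights: Tuple[float, float, float] = (0.6, 0.3, 0.1),
) -> List[Tuple[str, float]]:
    """Rank products by a weighted sum of visual, text, and behavioral signals."""
    w_visual, w_text, w_behavior = weights
    scored = [
        (pid,
         w_visual * cosine(query_vec, vec)
         + w_text * text_scores.get(pid, 0.0)
         + w_behavior * behavior_scores.get(pid, 0.0))
        for pid, vec in catalog.items()
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)


catalog = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.7, 0.7]}
ranked = hybrid_rank([1.0, 0.0], catalog, {"b": 1.0}, {"c": 1.0})
print([pid for pid, _ in ranked])  # ['a', 'c', 'b']
```

Product `c` outranks `b` even though `b` has the only text match, because `c` is both visually close to the query image and behaviorally strong. A production system would query an approximate nearest-neighbor index instead of scoring every product.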

Why Netcore Unbxd Visual Search stands out

An enterprise-grade visual search solution must handle noisy shopper photos, scale effortlessly with large and dynamic catalogs, and integrate smoothly with keyword search, filters, and recommendations. Netcore Unbxd Visual Search is built with these capabilities in mind and further optimized to achieve retailer-specific goals, including higher revenue, improved conversion rates, and fewer zero-result searches:

- Detects objects, patterns, and colors within an image
- Handles images of varying sizes, formats, and origins
- Identifies multiple objects with bounding boxes
- Returns visually similar results in roughly 3 seconds or less
- Accepts flexible input formats through the Visual Search API
- Integrates seamlessly with semantic, vector, and keyword engines
- Offers no-code setup and management via the Netcore Unbxd Console
- Provides in-depth analytics to measure revenue impact, user engagement, and historical trends

This creates a scalable, reliable, high-accuracy visual search experience tailored for product-rich ecommerce brands.
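Multi-object detection matters because a single shopper photo often contains several products. Below is a minimal Python sketch of the downstream step, assuming a detector has already returned labeled bounding boxes with an embedding for each cropped region. The `Detection` type, the confidence threshold, and `search_per_object` are hypothetical and do not describe the Netcore Unbxd Visual Search API.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class Detection:
    """One object found in a shopper photo (hypothetical detector output)."""
    label: str
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
    confidence: float
    embedding: List[float]          # embedding of the cropped box region


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search_per_object(
    detections: List[Detection],
    catalog: Dict[str, Sequence[float]],  # product id -> image embedding
    min_confidence: float = 0.5,
    k: int = 3,
) -> List[Tuple[Detection, List[str]]]:
    """Run a separate top-k similarity search for each confident detection."""
    results = []
    for det in detections:
        if det.confidence < min_confidence:
            continue  # skip low-confidence boxes rather than return noisy matches
        ranked = sorted(catalog, key=lambda pid: cosine(det.embedding, catalog[pid]),
                        reverse=True)
        results.append((det, ranked[:k]))
    return results


photo = [
    Detection("shirt", (10, 20, 200, 300), 0.9, [1.0, 0.0]),
    Detection("bag", (220, 150, 300, 260), 0.3, [0.0, 1.0]),
]
catalog = {"shirt-1": [1.0, 0.1], "shirt-2": [0.9, 0.5], "bag-1": [0.0, 1.0]}
for det, ids in search_per_object(photo, catalog, k=2):
    print(det.label, ids)  # shirt ['shirt-1', 'shirt-2']
```

Here the bag is dropped because its confidence (0.3) is below the 0.5 threshold, while the shirt returns its two closest catalog matches. Showing each detected object as its own result row lets the shopper pick which item in the photo they actually meant.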

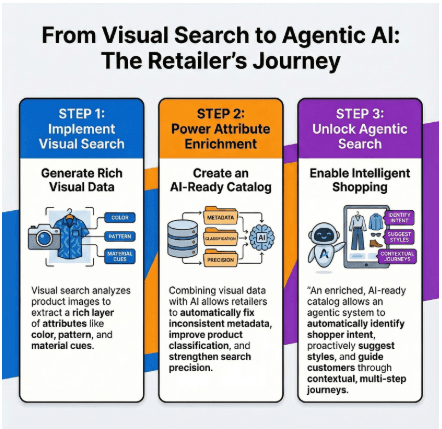

How visual search bridges into attribute enrichment and agentic search

Visual search generates a rich layer of visual attributes (color, pattern, material cues, structures) that enhance search ranking and retrieval. Combined with AI-led Attribute Enrichment, retailers can:


- Fix incomplete or inconsistent product metadata
- Improve product classification and category mapping
- Strengthen multimodal search precision
- Provide richer signals for vector and semantic rankers
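One conservative way to combine visually inferred attributes with existing metadata is to fill only the gaps. This minimal Python sketch assumes a vision model has returned a (value, confidence) pair per attribute; the `enrich` function and the 0.8 threshold are illustrative assumptions rather than a documented enrichment workflow.

```python
from typing import Dict, Tuple


def enrich(
    record: Dict[str, str],
    inferred: Dict[str, Tuple[str, float]],  # attribute -> (value, model confidence)
    min_confidence: float = 0.8,
) -> Dict[str, str]:
    """Fill missing catalog attributes with confident visual predictions.

    Existing non-empty values are never overwritten; the input record is not mutated.
    """
    enriched = dict(record)
    for attr, (value, confidence) in inferred.items():
        if confidence >= min_confidence and not enriched.get(attr):
            enriched[attr] = value
    return enriched


product = {"title": "Linen shirt", "color": "", "material": "linen"}
predictions = {
    "color": ("white", 0.93),      # fills the empty color field
    "material": ("cotton", 0.95),  # ignored: merchant already supplied "linen"
    "pattern": ("striped", 0.60),  # ignored: below the confidence threshold
}
print(enrich(product, predictions))
# {'title': 'Linen shirt', 'color': 'white', 'material': 'linen'}
```

Never overwriting merchant-supplied values keeps the step safe to rerun; disagreements such as “linen” vs “cotton” are better surfaced for review than silently resolved.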

Once your catalog is fully enriched and AI-ready, you unlock the next stage: agentic search. Agentic search uses multimodal understanding to:

- Reformulate vague queries
- Identify shopper intent automatically
- Suggest categories or styles proactively
- Guide shoppers through contextual, multi-step journeys

Visual search → attribute enrichment → agentic search becomes the natural AI growth path for modern retailers.

Book a demo today to explore Netcore Unbxd visual search.

FAQ

How does visual search work on an ecommerce site?

On an ecommerce site, visual search starts when the shopper taps a camera icon or uploads a photo. Computer vision models analyse the image, detect the main product and its attributes, and generate vectors for matching. A hybrid relevance engine then ranks matching products using visual similarity, text signals, and real-time behavioural data, so results feel accurate and personalised.

What is the difference between visual search and image search?

Image search generally finds images on the web that match a keyword or picture. Visual search in ecommerce is more product-focused and intent-aware. It uses the uploaded image to infer attributes such as category, style, colour, and pattern, then returns shoppable products instead of generic image results, so shoppers move directly from inspiration to purchase.

Which product categories benefit most from visual search?

Visual search works best in visually led categories where shoppers care about style and aesthetics. Fashion, footwear, jewellery, beauty, furniture, home decor, and lifestyle products are strong fits. In these categories, shoppers often start with a look or reference image, so image-based discovery removes friction and reduces the gap between browsing and buying.

What data do retailers need for effective visual search?

Retailers need clean, high-quality product images, consistent catalogue structures, and rich attribute coverage to get the best results from visual search. Multiple angles, uncluttered backgrounds, and category hygiene all improve detection accuracy. When this is combined with behavioural data and click feedback, the AI can rapidly learn which visual matches convert for different shopper cohorts.

How does visual search connect to multimodal search?

Visual search is often the first step toward a multimodal search experience where shoppers mix images, text, and filters in a single journey. A strong visual search pipeline produces high-quality attributes and vectors that feed into text search, recommendations, and conversational journeys. This lets shoppers refine an image-based query with words, questions, or filters without starting again.
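Refining an image-based query with words can be sketched as filtering the visual results by attribute constraints parsed from the text. This is a deliberately naive Python illustration: the `KNOWN_VALUES` vocabulary and the whitespace keyword matching are assumptions, and a real system would more likely use entity recognition or a language model to interpret the refinement.

```python
from typing import Dict, List

# Hypothetical attribute vocabulary used to spot constraints in free text.
KNOWN_VALUES: Dict[str, set] = {
    "color": {"black", "white", "red"},
    "material": {"leather", "canvas"},
}


def parse_refinement(text: str) -> Dict[str, str]:
    """Extract attribute constraints from a short text refinement (naive keyword match)."""
    constraints: Dict[str, str] = {}
    for token in text.lower().split():
        for attr, values in KNOWN_VALUES.items():
            if token in values:
                constraints[attr] = token
    return constraints


def refine(visual_ranking: List[str],
           attributes: Dict[str, Dict[str, str]],
           text: str) -> List[str]:
    """Keep image-search results that satisfy the text constraints, preserving visual order."""
    constraints = parse_refinement(text)
    return [
        pid for pid in visual_ranking
        if all(attributes.get(pid, {}).get(attr) == value
               for attr, value in constraints.items())
    ]


visual_ranking = ["p1", "p2", "p3"]  # already ordered by visual similarity
attributes = {
    "p1": {"color": "white", "material": "canvas"},
    "p2": {"color": "black", "material": "leather"},
    "p3": {"color": "black", "material": "canvas"},
}
print(refine(visual_ranking, attributes, "same but in black"))  # ['p2', 'p3']
print(refine(visual_ranking, attributes, "black leather"))      # ['p2']
```

Filtering rather than re-ranking preserves the visual order, so the shopper still sees the closest look first after adding words to the query.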

How does Netcore Unbxd visual search improve product discovery?

Netcore Unbxd visual search combines visual signals, text relevance, and behavioural data inside one commerce search platform. The system cleans noisy real-world images, enriches product attributes, and uses a hybrid ranking model that adapts to category-specific behaviour. This helps retailers reduce zero-result sessions, improve search-to-cart conversions, and build a practical bridge to multimodal and agentic search experiences.